Samsung has positioned itself at the forefront of next-generation memory technology with its ambitious HBM4E roadmap, revealing specifications that promise to dramatically accelerate artificial intelligence workloads. The Korean semiconductor giant’s announcement at the Open Compute Project Global Summit signals a monumental leap in high-bandwidth memory performance that could reshape the landscape of AI infrastructure.

According to industry reports detailing Samsung’s memory advancements, the company is targeting 3.25 TB/s of bandwidth for its HBM4E modules, nearly 2.5 times the bandwidth of current HBM3E solutions. This performance surge comes at a critical juncture, as AI models grow more complex and place heavier demands on memory.

Unprecedented Speed and Efficiency Gains

Samsung’s HBM4E architecture achieves its performance through multiple technological innovations. The memory stacks will operate at 13 Gbps per pin, a substantial increase over existing solutions. Perhaps even more impressive is the power efficiency improvement: Samsung claims nearly double the efficiency of current HBM3E modules, a crucial factor for data centers grappling with escalating energy demands from AI workloads.
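The relationship between a per-pin data rate and the headline bandwidth figure can be sketched with a few lines of arithmetic. This is only an illustration: it treats the 13 Gbps figure as a per-pin rate and assumes a 2048-bit interface per stack (the width used by HBM4; the article does not state the width for HBM4E). The function names are hypothetical.

```python
def stack_bandwidth_tbps(pin_rate_gbps: float, interface_bits: int = 2048) -> float:
    """Peak bandwidth of one stack in TB/s (decimal units).

    interface_bits=2048 is an assumption, not a figure from the article.
    """
    bits_per_second = pin_rate_gbps * 1e9 * interface_bits
    return bits_per_second / 8 / 1e12  # bits -> bytes -> terabytes


def implied_pin_rate_gbps(bandwidth_tbps: float, interface_bits: int = 2048) -> float:
    """Per-pin rate in Gbps that would yield the given stack bandwidth."""
    return bandwidth_tbps * 1e12 * 8 / interface_bits / 1e9


if __name__ == "__main__":
    print(f"13 Gbps per pin  -> {stack_bandwidth_tbps(13):.3f} TB/s")
    print(f"3.25 TB/s target -> {implied_pin_rate_gbps(3.25):.3f} Gbps per pin")
```

Under these assumptions, 13 Gbps per pin works out to about 3.33 TB/s, while the quoted 3.25 TB/s corresponds to roughly 12.7 Gbps per pin, so the two reported figures are close but not identical.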

The timing of this advancement aligns perfectly with the growing computational requirements of modern AI systems. As organizations seek to develop custom AI workflows and specialized processing solutions, the availability of higher-bandwidth memory becomes increasingly critical for maintaining competitive advantage in artificial intelligence development.

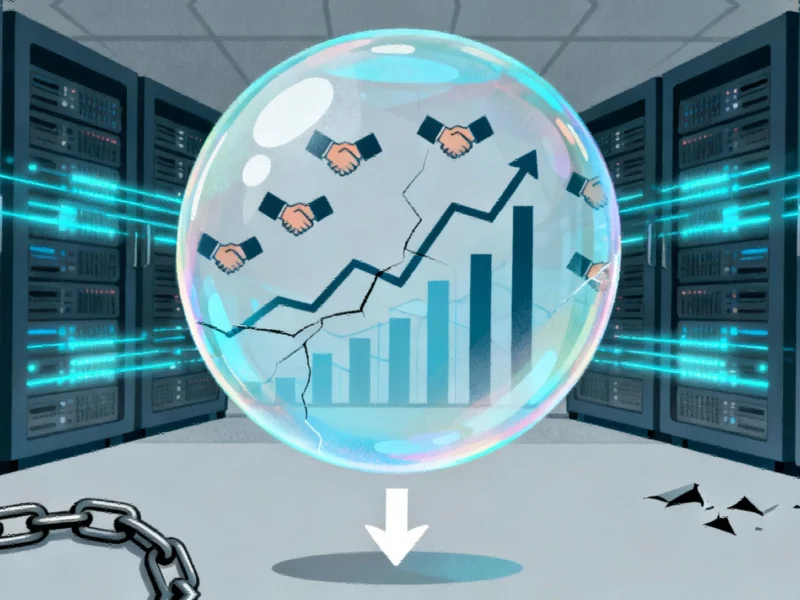

Strategic Industry Partnerships Driving Innovation

Samsung’s accelerated HBM4E development appears closely tied to strategic partnerships with leading AI hardware manufacturers. Industry sources indicate that NVIDIA specifically requested enhanced HBM4 solutions to power its upcoming Rubin architecture, creating a powerful synergy between memory innovation and processor design. This collaboration mirrors similar advancements across the computing landscape, where manufacturers are pushing technical boundaries to meet escalating performance demands.

The race for memory performance extends beyond high-bandwidth solutions, as evidenced by recent developments in other segments of the market. Competitors have been making their own strides, with some companies achieving remarkable DDR5 speed records on more accessible platforms, demonstrating the widespread push for faster memory technologies across different market segments.

Broader Implications for Computing Ecosystem

Samsung’s HBM4E breakthrough arrives amid significant transformations across the computing industry. The performance leap comes as major technology companies are rethinking fundamental aspects of their hardware strategies. For instance, Apple’s planned MacBook Pro overhaul featuring OLED displays and touchscreen capabilities represents another facet of the industry-wide push toward more advanced and specialized computing platforms.

The timing of these memory advancements also coincides with important developments in software and content creation. As hardware capabilities expand, content developers are leveraging these improvements to create more immersive experiences, with companies like Ubisoft detailing ambitious gaming campaigns that demand substantial computational resources.

Security Considerations in the AI Era

As memory performance reaches new heights, security becomes an increasingly critical consideration. The massive data throughput enabled by HBM4E technology necessitates robust security frameworks, particularly as AI systems handle sensitive information and make autonomous decisions. This aligns with broader industry efforts to develop comprehensive security protocols for AI agents and automated systems operating in enterprise environments.

Samsung has also reached pin speeds of 11 Gbps with its HBM4 memory, well above the 8 Gbps specified in the JEDEC HBM4 standard, which demonstrates the company’s commitment to pushing technological boundaries while maintaining compatibility with industry ecosystems. This approach is intended to let the performance benefits of HBM4E be integrated into existing AI infrastructure without requiring complete system overhauls.

Market Impact and Future Trajectory

The introduction of HBM4E memory technology represents more than just an incremental improvement – it signals a fundamental shift in what’s possible for AI computation. With bandwidth approaching 3.25 TB/s, data scientists and AI researchers will be able to train larger models and process more complex datasets without being constrained by memory bottlenecks.
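To put the bandwidth figure in perspective, the sketch below estimates how long it takes to stream a fixed amount of data once at peak bandwidth. It is a back-of-the-envelope model only: it ignores capacity limits, access patterns and compute time, the 1 TB working set is a hypothetical example, and the HBM3E baseline is simply derived from the article's "nearly 2.5 times" claim rather than taken from a datasheet.

```python
def seconds_to_stream(num_bytes: float, bandwidth_tbps: float) -> float:
    """Lower bound on the time to read num_bytes once at peak bandwidth."""
    return num_bytes / (bandwidth_tbps * 1e12)


HBM4E_TBPS = 3.25               # figure quoted in the article
HBM3E_TBPS = HBM4E_TBPS / 2.5   # assumption: derived from the "2.5 times" claim

if __name__ == "__main__":
    working_set = 1e12  # hypothetical 1 TB of data read once
    t_new = seconds_to_stream(working_set, HBM4E_TBPS)
    t_old = seconds_to_stream(working_set, HBM3E_TBPS)
    print(f"HBM4E: {t_new:.2f} s, HBM3E baseline: {t_old:.2f} s")
```

With these numbers, one pass over the data drops from roughly 0.77 s to roughly 0.31 s. That is the kind of headroom that matters for workloads limited by memory traffic rather than by arithmetic.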

Industry analysts suggest that Samsung’s early leadership in HBM4E development could strengthen its position in the competitive memory market, particularly as demand for AI-optimized hardware continues to surge. The company’s ability to secure contracts with major AI chip manufacturers before formally announcing product specifications indicates strong market confidence in its technological roadmap.

As Samsung prepares for volume production of HBM4E modules, the entire AI computing ecosystem stands to benefit from these advancements. From research institutions to commercial AI applications, the increased memory bandwidth and efficiency will enable new classes of AI models and applications that were previously constrained by memory limitations.

Based on reporting by Wccftech (wccftech.com). This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.