The New Digital Witness

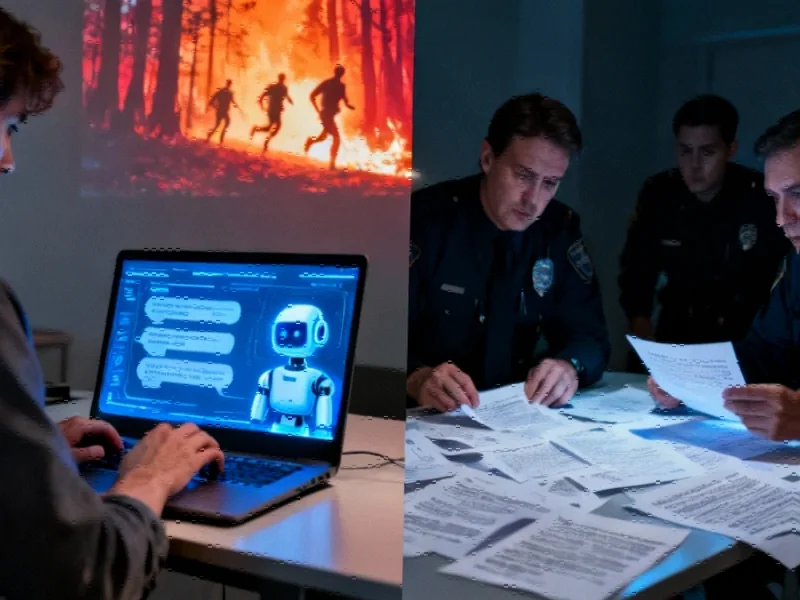

In the evolving landscape of digital evidence, AI chat logs are emerging as perhaps the most revealing corporate record since the invention of the email server. The recent Palisades Fire case, where prosecutors used a suspect’s ChatGPT interactions to build an arson and murder case, demonstrates a fundamental shift in how digital footprints are being interpreted by authorities. What was once considered casual conversation with AI assistants has become a treasure trove of intent, mindset, and premeditation that rivals traditional forensic evidence.


Unlike DNA or fingerprints that merely place someone at a scene, AI chat logs capture the evolution of thought in real time. As one security expert noted, “ChatGPT has become a confessional, a planning board, and a mirror for intent.” This creates an unprecedented record of not just what someone did, but how they thought about doing it—complete with doubts, revisions, and testing of ideas.

The Corporate Risk Dimension

While criminal cases capture headlines, the implications for corporate security are equally profound. Every organization using large language models is potentially generating evidence that could be used against it in future litigation or investigations. The conversations employees have with AI systems often contain proprietary strategies, competitive assessments, and unfinished business plans that would never appear in formal documents.

Consider what happens when an employee uses ChatGPT to brainstorm solutions to a manufacturing challenge or to analyze competitor weaknesses. Those chat logs could become critical evidence in corporate espionage cases or intellectual property disputes. Because these conversations are informal, they often contain more candid assessments than would ever appear in official communications.

The Security Paradox: Control vs. Innovation

This creates a significant governance challenge for security professionals. The traditional approach of simply blocking AI tools drives usage underground, creating what security experts call the “shadow AI” problem. When employees resort to personal accounts and unauthorized tools, visibility disappears while risk multiplies.

The financial sector’s response to the surge in AI investment shows how highly regulated industries are navigating this challenge. Rather than outright bans, leading organizations are building frameworks that enable safe experimentation while maintaining oversight. This represents a fundamental shift from security as a “function of no” to security as an “enabler of yes with guardrails.”

Building Distributed Security Intelligence

The solution lies in recognizing that AI is already embedded across every platform and business function. Centralized security controls that worked for traditional IT infrastructure are inadequate for the distributed nature of AI usage. Instead, organizations need security presence at the point of innovation—within each business unit where AI tools are being used.

This approach mirrors how connectivity solutions have evolved to support distributed manufacturing environments. Security becomes less about building walls and more about creating awareness, guidance, and real-time coaching. It’s the difference between taking away a teenager’s devices and teaching them digital responsibility.

The Technical Foundation for AI Governance

Effective AI security requires a multi-layered approach that balances protection with utility. The SANS Institute Secure AI Blueprint outlines three critical pillars: Protect AI systems from misuse, Utilize AI safely to drive business value, and Govern AI through appropriate oversight mechanisms.


Technical controls must include robust access management, comprehensive audit trails, model integrity verification, and human oversight. These measures become particularly important as organizations address security vulnerabilities in their technology stack. The same rigor applied to traditional software patching must extend to AI systems and their supporting infrastructure.
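To make “comprehensive audit trails” more concrete, here is a minimal Python sketch of a tamper-evident log for AI chat interactions, assuming a simple in-memory store. Each entry records the SHA-256 hash of the entry before it, so altering any record, or removing one from the middle of the chain, causes verification to fail. The AuditTrail class and its field names are hypothetical illustrations, not part of the SANS blueprint or any vendor product; a production system would also need durable storage, timestamps, and access controls.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditTrail:
    """Hypothetical append-only log; each entry commits to the previous entry's hash."""

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, user: str, prompt: str, response: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"user": user, "prompt": prompt, "response": response, "prev": prev_hash}
        record["hash"] = self._digest(record)  # hash is computed before it is stored
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous entry."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body.get("prev") != prev_hash or self._digest(body) != entry.get("hash"):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.append("alice", "Summarize Q3 supplier risks", "(model output)")
    trail.append("bob", "Draft a reply to the vendor", "(model output)")
    print(trail.verify())  # True

    trail.entries[0]["prompt"] = "edited after the fact"
    print(trail.verify())  # False
```

Hash chaining only detects tampering; it does not prevent it. In practice, the most recent hash would be copied somewhere the log writer cannot modify, so that truncating the end of the log is also detectable.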

The Competitive Imperative

Organizations that master this balance gain significant competitive advantage. While competitors struggle with security-driven innovation paralysis, forward-thinking companies are building what might be called “AI literacy” across their workforce. This includes understanding what types of information should never be shared with AI systems, how to recognize when conversations are being recorded, and what constitutes appropriate use.
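One way to put “what should never be shared” into practice is a pre-submission filter that redacts obvious secrets before a prompt leaves the organization and tells the user what was caught, which also supports the real-time coaching approach described earlier. The sketch below is a hypothetical redact function; its patterns (email addresses, AWS-style access key IDs, US Social Security numbers) are illustrative assumptions rather than a recommended policy. Regular expressions will not catch proprietary strategy or competitive analysis, which is where the AI literacy training matters most.

```python
import re

# Illustrative patterns only; an organization would define its own policy.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # AWS access key ID format
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US Social Security number
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with [LABEL] placeholders.

    Returns the cleaned prompt and the labels that were found, so the calling
    tool can explain to the user why their text was changed.
    """
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


if __name__ == "__main__":
    cleaned, labels = redact("Email jane.doe@example.com the key AKIAABCDEFGHIJKLMNOP")
    print(cleaned)  # Email [EMAIL] the key [AWS_KEY]
    print(labels)   # ['EMAIL', 'AWS_KEY']
```

Returning the matched labels, rather than silently rewriting the prompt, is a deliberate choice: it turns each blocked item into a short lesson for the employee instead of an unexplained failure that pushes them toward personal accounts.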

The telecommunications sector offers interesting parallels, where companies like Mint Mobile have disrupted established markets through innovative approaches to service delivery. Similarly, companies that develop sophisticated AI governance models may find themselves outperforming less agile competitors.

Looking Forward: The Evolving Legal Landscape

As AI chat logs become more prevalent in legal proceedings, organizations must anticipate how their digital conversations might be interpreted in future disputes. This requires not just technical controls but also policy development, employee training, and clear guidelines about appropriate AI usage.

Oracle’s experience of confronting market realities around its AI ambitions shows that even well-resourced technology giants struggle with implementation. Success requires acknowledging that nobody fully understands AI’s implications yet, and that admission is where true leadership begins.

The goal isn’t to stop AI adoption but to ensure it happens safely. As AI continues to transform how we work and communicate, organizations that build security into their innovation processes rather than treating it as an obstacle will be best positioned to thrive in this new environment. The conversation has moved beyond whether to use AI to how to use it responsibly, and that requires recognizing that every chat might someday be read in a courtroom.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.