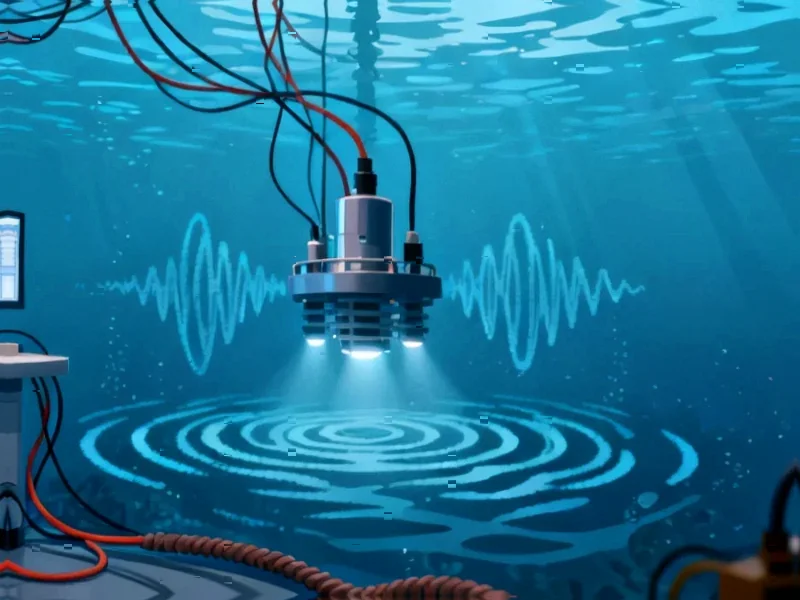

According to Nature, researchers have developed SAM-whistle, an AI system that adapts the Segment Anything Model (SAM) foundation model for automated dolphin whistle detection. It was evaluated on the DCLDE 2011 dataset, which contains 7,211 training whistles and 860 test whistles from common and bottlenose dolphins, and achieved an F1 score of 0.897 (precision 0.920, recall 0.876). SAM-whistle retrieves nearly complete whistle contours, covering 89% of analyst-annotated contours while producing only 1.15 detections per whistle, which indicates little fragmentation. The model uses SAM's pre-trained vision transformer (ViT) as an encoder, adapted to generate spectrogram embeddings, and a convolutional decoder that produces time-frequency masks indicating where whistle energy is present. The result demonstrates the potential of adapting foundation models to specialized bioacoustic tasks and offers a scalable approach to automated wildlife vocalization detection.
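
The F1 score is the harmonic mean of precision and recall, so the reported figures can be cross-checked directly. The short Python sketch below does that; the helper names `f1_score` and `precision_recall` are illustrative and are not taken from the SAM-whistle code.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    # Precision and recall as reported for SAM-whistle on DCLDE 2011.
    print(round(f1_score(0.920, 0.876), 3))  # 0.897
```

Plugging in the reported precision and recall gives an F1 of about 0.8975, consistent with the published 0.897.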


The Foundation Model Revolution Hits Marine Science

What makes SAM-whistle particularly significant is its demonstration that foundation models originally developed for general computer vision tasks can be effectively repurposed for highly specialized scientific domains. Traditional bioacoustic analysis has typically required building models from scratch on limited labeled datasets, a fundamental bottleneck in marine mammal research. SAM's ability to generalize across domains suggests that large pre-trained models could become standard tools across scientific disciplines. Such a shift would resemble the way BERT and GPT models transformed natural language processing, this time extending into environmental science and conservation biology.

Beyond Traditional Signal Processing Limitations

The technical architecture of SAM-whistle addresses several limitations of conventional approaches. Each layer of a convolutional neural network sees only a local neighborhood, so these networks struggle to capture long-range dependencies in spectrogram data, particularly for extended dolphin whistles that span many time frames. The vision transformer's self-attention mechanism lets every patch of the spectrogram attend to every other patch. This gives the model global context, making it effective at detecting complex whistle patterns that might otherwise be fragmented or obscured by background noise. The capability matters in real marine environments, where vessel noise, biological sounds, and ocean acoustics create challenging detection conditions.
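
A minimal, pure-Python sketch of single-head scaled dot-product self-attention illustrates why the receptive field is global: each patch embedding is scored against every other patch, regardless of how far apart they are. This is a simplification and not SAM's encoder. The query, key and value projections are taken as the identity rather than learned, there is only one head, and the six two-dimensional "patch embeddings" are hypothetical values chosen for illustration.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(
    tokens: list[list[float]],
) -> tuple[list[list[float]], list[list[float]]]:
    """Single-head scaled dot-product self-attention.

    Simplification: query, key and value projections are the identity
    (a real ViT learns a separate weight matrix for each).
    Returns (outputs, attention_weights).
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    weights, outputs = [], []
    for q in tokens:
        # Each query is scored against EVERY token: a global receptive field.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted sum of all value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, tokens)) for i in range(d)])
    return outputs, weights


if __name__ == "__main__":
    # Hypothetical embeddings for six patches along the time axis;
    # patches 0 and 5 are far apart but carry a similar whistle-like pattern.
    patches = [[1.0, 0.0], [0.0, 0.1], [0.1, 0.0], [0.0, 0.0], [0.0, 0.1], [1.0, 0.1]]
    _, attn = self_attention(patches)
    # Patch 0 attends most strongly to itself and to the distant patch 5.
    print([round(a, 2) for a in attn[0]])
```

A convolution with a small kernel would need several stacked layers before patch 0 could "see" patch 5; here the link is made in a single attention step.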

Transforming Marine Conservation Efforts

The implications for marine conservation are substantial. Current methods for monitoring dolphin populations often rely on visual surveys, which are expensive, weather-dependent, and limited in spatial coverage. Automated acoustic monitoring with systems like SAM-whistle could enable continuous, large-scale monitoring of marine mammal populations, potentially at much lower cost. This is particularly important for tracking endangered species, monitoring the impacts of offshore wind development, and assessing how climate change affects marine ecosystems. Because the system generates fine-grained contour data rather than simple presence/absence detections, it also opens possibilities for studying individual identification, behavioral patterns, and population dynamics.
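
The difference between a presence/absence flag and a contour can be illustrated with a simple post-processing step. The sketch below is a hypothetical approach, not SAM-whistle's published method. It assumes a binary mask indexed as `mask[frequency_bin][time_frame]` and a list of bin center frequencies. For each frame it takes the mean frequency of the active bins, and it groups consecutive active frames into one contour, discarding runs shorter than a minimum length.

```python
def mask_to_contours(
    mask: list[list[int]], freqs_hz: list[float], min_len: int = 2
) -> list[list[tuple[int, float]]]:
    """Trace contours from a binary time-frequency mask (hypothetical helper).

    mask[f][t] is 1 where whistle energy is marked at frequency bin f and
    time frame t. Each contour is a list of (time_frame, frequency_hz) points;
    runs of fewer than min_len consecutive active frames are dropped.
    """
    n_freq, n_time = len(mask), len(mask[0])
    contours: list[list[tuple[int, float]]] = []
    current: list[tuple[int, float]] = []
    for t in range(n_time):
        active = [freqs_hz[f] for f in range(n_freq) if mask[f][t]]
        if active:
            # Mean frequency of active bins in this frame.
            current.append((t, sum(active) / len(active)))
        else:
            # A silent frame ends the current run.
            if len(current) >= min_len:
                contours.append(current)
            current = []
    if len(current) >= min_len:
        contours.append(current)
    return contours


if __name__ == "__main__":
    freqs = [5000.0, 10000.0, 15000.0, 20000.0]
    # Rows are frequency bins (low to high), columns are time frames:
    # an upsweep over frames 0-3, then an isolated one-frame blip at frame 5.
    mask = [
        [1, 0, 0, 0, 0, 0],
        [0, 1, 0, 0, 0, 0],
        [0, 0, 1, 0, 0, 1],
        [0, 0, 0, 1, 0, 0],
    ]
    print(mask_to_contours(mask, freqs))
```

The upsweep comes back as one contour with a frequency value for every frame, while the single-frame blip is filtered out. Contour-level output of this kind, rather than a yes/no flag, is what makes shape-based analyses possible.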

Real-World Implementation Challenges

Despite the impressive results, several practical challenges remain for widespread deployment. The computational requirements for running vision transformer models could be prohibitive for real-time analysis on remote monitoring buoys with limited power budgets. There’s also the question of generalization across different dolphin species and geographic regions – while the model performed well on common and bottlenose dolphins, its effectiveness with other species like spinner dolphins or orcas remains untested. Additionally, the system’s performance in extremely noisy environments, such as areas with heavy shipping traffic or seismic surveys, represents a critical test for practical applications.
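
A rough calculation shows why transformer encoders are costly on low-power hardware. A ViT splits its input into fixed-size patches, and one global self-attention head builds a score matrix whose size grows with the square of the patch count. The sketch assumes the 1024 × 1024 input and 16 × 16 patches of the original SAM image encoder. Whether SAM-whistle uses the same resolution is not stated here, and the count ignores windowed attention and other optimizations a real encoder may use.

```python
def vit_attention_cost(height: int, width: int, patch: int = 16) -> tuple[int, int]:
    """Return (num_tokens, score_entries) for one global self-attention head.

    The input is split into non-overlapping patch x patch tiles (one token
    each); global self-attention then scores every token against every
    token, producing a num_tokens x num_tokens matrix.
    """
    num_tokens = (height // patch) * (width // patch)
    return num_tokens, num_tokens * num_tokens


if __name__ == "__main__":
    # Assumed SAM-style input resolution and patch size (see lead-in).
    for side in (1024, 512):
        tokens, entries = vit_attention_cost(side, side)
        print(f"{side}x{side}: {tokens} tokens, {entries:,} attention entries")
```

At 1024 × 1024 this gives 4,096 tokens and 16,777,216 score entries per head and layer. Halving each side to 512 × 512 cuts the token count by 4× and the attention matrix by 16×, to 1,048,576 entries, which is one reason input resolution is a key lever for deployment on buoys.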

Future Research Directions

This research opens several exciting avenues for future development. The same approach could potentially be adapted for other marine species with distinctive vocalizations, from humpback whale songs to fish choruses. There’s also potential for multi-modal systems that combine acoustic detection with other sensor data, creating more comprehensive monitoring solutions. As foundation models continue to evolve, we might see even more sophisticated applications that can not only detect vocalizations but also classify behaviors, identify individuals, and even predict population trends based on acoustic patterns.

Broader Scientific Impact

The success of SAM-whistle suggests we’re witnessing the beginning of a broader trend where foundation models become essential tools across scientific domains. Just as computational methods transformed biology and physics in previous decades, AI foundation models may become the next transformative technology for environmental science. The ability to leverage massive pre-trained models for specialized tasks could accelerate research across ecology, oceanography, and conservation biology, potentially helping address some of our most pressing environmental challenges through more efficient and comprehensive monitoring.