Architectural Choices Shape Neural Circuit Solutions

According to research published in Nature Machine Intelligence, the choice of activation function and connectivity constraints in recurrent neural networks (RNNs) leads to fundamentally different circuit mechanisms for solving identical cognitive tasks. The study analyzed six RNN architectures, combining three common activation functions (ReLU, sigmoid, and tanh) with and without Dale's law. Dale's law is a connectivity constraint that makes each unit either exclusively excitatory or exclusively inhibitory, as biological neurons are.
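
To make this design space concrete, here is a minimal sketch of a discrete-time RNN update in which the activation function is a parameter and Dale's law is imposed by giving every presynaptic unit a fixed sign. The discrete-time form, the 80/20 excitatory/inhibitory split, and the helper names `make_dale_weights` and `rnn_step` are illustrative assumptions, not the study's code. During training, the sign constraint would also have to be preserved, for example by reparameterizing or clipping the weights; that step is omitted here.

```python
import math
import random


def _sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# The three activation functions compared in the study.
ACTIVATIONS = {
    "relu": lambda z: max(0.0, z),
    "sigmoid": _sigmoid,
    "tanh": math.tanh,
}


def make_dale_weights(n, frac_excitatory=0.8, seed=0):
    """Hypothetical helper: random n x n recurrent weights obeying Dale's law.

    Column j holds the outgoing weights of presynaptic unit j, so every entry
    in that column shares the unit's sign (+1 excitatory, -1 inhibitory).
    """
    rng = random.Random(seed)
    n_exc = int(round(frac_excitatory * n))
    signs = [1.0] * n_exc + [-1.0] * (n - n_exc)
    # Nonnegative magnitude times the fixed sign of the presynaptic unit.
    weights = [
        [abs(rng.gauss(0.0, 1.0 / math.sqrt(n))) * signs[j] for j in range(n)]
        for _ in range(n)
    ]
    return weights, signs


def rnn_step(h, x, w_rec, w_in, act):
    """One update: h_next[i] = act(sum_j W_rec[i][j] h[j] + sum_k W_in[i][k] x[k])."""
    return [
        act(sum(w * hj for w, hj in zip(w_rec[i], h))
            + sum(w * xk for w, xk in zip(w_in[i], x)))
        for i in range(len(h))
    ]


# Usage: one tanh step of a 10-unit Dale's-law network driven by a scalar input.
w_rec, signs = make_dale_weights(10)
w_in = [[1.0] for _ in range(10)]
h = rnn_step([0.0] * 10, [0.5], w_rec, w_in, ACTIVATIONS["tanh"])
```

Swapping `ACTIVATIONS["tanh"]` for `"relu"` or `"sigmoid"`, and using unconstrained Gaussian weights instead of `make_dale_weights`, spans the six combinations described above.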

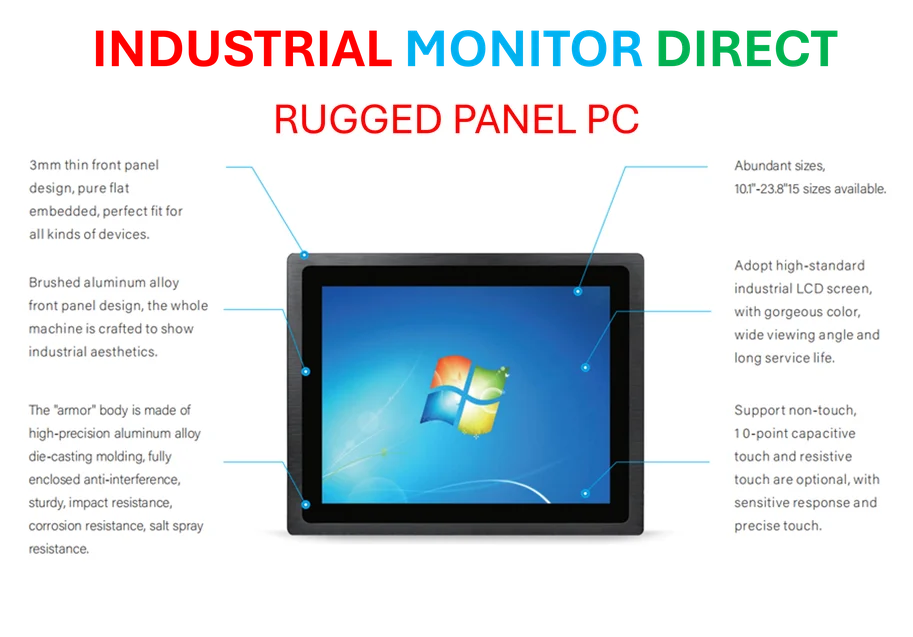

Distinct Neural Representations Emerge Across Architectures

Neural representations differed markedly across architectures, even when networks were trained to similar performance levels on a context-dependent decision-making task. Analysis of population trajectories showed that ReLU and sigmoid RNNs formed symmetric, butterfly-shaped sets of trajectories, whereas tanh networks produced trajectories that diverged immediately and formed two distinct sheets orthogonal to the context axis. According to the study, these differences were already evident in randomly initialized networks and were amplified by training.

The researchers quantified these differences systematically by embedding the trajectory sets into a shared two-dimensional space using multidimensional scaling (MDS). This analysis confirmed that networks with different architectures formed distinct clusters, with tanh RNNs clearly separated from both ReLU and sigmoid networks, regardless of connectivity constraints.
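
The MDS step can be sketched as follows. This is classical (Torgerson) MDS applied to a precomputed, symmetric distance matrix, implemented with power iteration and deflation so it needs only the standard library. It assumes the distances are close to Euclidean, so that the double-centered matrix is positive semidefinite; the article does not say how distances between trajectory sets were measured or which MDS variant the study used, and the name `classical_mds` is illustrative.

```python
import math


def classical_mds(dist, n_components=2, n_iter=500):
    """Classical (Torgerson) MDS: embed an n x n distance matrix in n_components dims."""
    n = len(dist)
    # Double-center the squared distances: B = -1/2 * J D^2 J, with J = I - 11^T/n.
    sq = [[d * d for d in row] for row in dist]
    row_mean = [sum(row) / n for row in sq]
    col_mean = [sum(sq[i][j] for i in range(n)) / n for j in range(n)]
    grand = sum(row_mean) / n
    b = [[-0.5 * (sq[i][j] - row_mean[i] - col_mean[j] + grand) for j in range(n)]
         for i in range(n)]

    coords = [[0.0] * n_components for _ in range(n)]
    for comp in range(n_components):
        # Power iteration for the leading eigenpair of the (deflated) matrix B.
        v = [1.0 + 0.01 * i for i in range(n)]
        lam = 0.0
        for _ in range(n_iter):
            bv = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in bv))
            if norm < 1e-12:
                lam = 0.0
                break
            lam, v = norm, [x / norm for x in bv]
        # Coordinates along this axis are sqrt(eigenvalue) * eigenvector.
        scale = math.sqrt(lam)
        for i in range(n):
            coords[i][comp] = scale * v[i]
        # Deflate: remove the found component before searching for the next one.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords
```

In the setting described above, `dist[i][j]` would hold some dissimilarity between the trajectory sets of networks `i` and `j`, and networks whose embedded points cluster together share similar population dynamics. Power iteration converges slowly when the top eigenvalues are nearly equal; a library eigensolver would be the practical choice for large matrices.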

Single-Unit Selectivity Reveals Fundamental Divergence

Single-unit selectivity also differed sharply between architecture types. In ReLU and sigmoid RNNs, unit selectivities formed cross-shaped patterns with continuously populated arms, whereas tanh networks showed a large central cluster with a few distant outlier units. These patterns reflect fundamentally different ways of processing information that emerge during training.
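
One common way to place units in a selectivity space, assumed here rather than taken from the study, is to regress each unit's trial-averaged activity on the task's two sensory evidence variables and use the fitted coefficients as that unit's coordinates. Plotting these coefficient pairs for all units gives the kind of scatter in which cross-shaped or clustered configurations appear. The helper `selectivity` is hypothetical and fits ordinary least squares with an intercept.

```python
def selectivity(rates, s1, s2):
    """Hypothetical helper: OLS fit rate ~ b0 + b1*s1 + b2*s2; returns (b1, b2).

    The coefficients place one unit in a 2-D selectivity space; repeating this
    for every unit yields the scatter whose shape is compared across architectures.
    """
    n = len(rates)
    # Center everything so the intercept drops out of the normal equations.
    mr, m1, m2 = sum(rates) / n, sum(s1) / n, sum(s2) / n
    r = [v - mr for v in rates]
    a = [v - m1 for v in s1]
    c = [v - m2 for v in s2]
    saa = sum(x * x for x in a)
    scc = sum(x * x for x in c)
    sac = sum(x * y for x, y in zip(a, c))
    sar = sum(x * y for x, y in zip(a, r))
    scr = sum(x * y for x, y in zip(c, r))
    det = saa * scc - sac * sac
    if abs(det) < 1e-12:
        raise ValueError("stimulus regressors are collinear")
    # Solve the 2 x 2 normal equations [[saa, sac], [sac, scc]] @ [b1, b2] = [sar, scr].
    b1 = (scc * sar - sac * scr) / det
    b2 = (saa * scr - sac * sar) / det
    return b1, b2


# Usage: a unit driven mostly by stimulus 1 lands near the s1 "arm" of a cross.
s1 = [-1.0, -1.0, 1.0, 1.0]
s2 = [-1.0, 1.0, -1.0, 1.0]
rates = [2.0 + 3.0 * x - 0.5 * y for x, y in zip(s1, s2)]
print(selectivity(rates, s1, s2))  # -> (3.0, -0.5)
```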

Dynamical Mechanisms Underlie Performance Differences

The research team characterized the networks' dynamical mechanisms by analyzing their fixed points: states in which network activity remains unchanged unless externally perturbed. ReLU and sigmoid RNNs showed similar fixed-point organizations, clearly separated according to the context cue, whereas in tanh networks the fixed points were spread across a sheet-like plane in state space, with weaker suppression of irrelevant information.
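
A widely used way to locate fixed points in trained RNNs, introduced by Sussillo and Barak (2013), is to minimize the network's "speed" q(h) = ½‖F(h) − h‖², where F is the update map; q = 0 exactly at fixed points. The sketch below applies plain gradient descent to q for a small discrete-time tanh network without input. The discrete-time map, the learning rate, and the names `find_fixed_point` and `_tanh_map` are assumptions for illustration; the article does not state which procedure the study used.

```python
import math


def _tanh_map(w, b, h):
    """Discrete-time tanh RNN without input: F(h) = tanh(W h + b)."""
    return [math.tanh(sum(wij * hj for wij, hj in zip(row, h)) + bi) for row, bi in zip(w, b)]


def find_fixed_point(w, b, h0, lr=0.5, n_steps=5000, tol=1e-20):
    """Gradient descent on the speed q(h) = 0.5 * ||F(h) - h||^2.

    Returns (h, q). q close to 0 means h is a fixed point; a small but
    nonzero q means the search stalled at a slow point instead.
    """
    n = len(h0)
    h = list(h0)
    for _ in range(n_steps):
        f = _tanh_map(w, b, h)
        r = [fi - hi for fi, hi in zip(f, h)]  # residual F(h) - h
        if 0.5 * sum(x * x for x in r) < tol:
            break
        # grad q = (J - I)^T r, where J[i][j] = (1 - f[i]^2) * w[i][j].
        grad = [
            sum(((1.0 - f[i] ** 2) * w[i][j] - (1.0 if i == j else 0.0)) * r[i] for i in range(n))
            for j in range(n)
        ]
        h = [hj - lr * gj for hj, gj in zip(h, grad)]
    f = _tanh_map(w, b, h)
    q = 0.5 * sum((fi - hi) ** 2 for fi, hi in zip(f, h))
    return h, q


# Usage: with W = 2I and b = 0, each unit has stable fixed points at +/- x*, where x* = tanh(2 x*).
h_star, speed = find_fixed_point([[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0], [0.8, 0.8])
```

Running the search from many initial states (for instance, states visited along task trajectories) and collecting the results with near-zero speed maps out the fixed-point configuration; the eigenvalues of the Jacobian at each point then indicate whether it is stable.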

These dynamical differences persisted when the researchers examined trajectory endpoints, which tend to converge toward stable fixed points. The consistency across analytical approaches indicates that architectural choices impose strong inductive biases that shape how networks develop solutions to cognitive tasks.

Circuit Mechanisms Define Generalization Capabilities

Using model distillation, the researchers found that different architectures discovered qualitatively distinct circuit solutions for the same task. ReLU and sigmoid RNNs relied on an inhibitory mechanism in which context nodes suppress irrelevant sensory information, whereas tanh networks used a saturation-based mechanism in which irrelevant nodes are driven into opposite saturation regions of the activation function.
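
The two gating strategies can be contrasted with a toy, single-node sketch. In the ReLU version, a context signal subtracts a large inhibitory current so the irrelevant sensory node stays below threshold; in the tanh version, context drives the node deep into saturation, where the slope of tanh is nearly zero. The gate functions, the inhibition and drive strengths, and the assumed training range |s| ≤ 1 are all hypothetical; they are not the circuits distilled in the study, and this toy saturates a single node in one direction only.

```python
import math


# Hypothetical toy gates, not the distilled circuits from the study.
def relu_gate(s, ignore, inhibition=2.0):
    """Inhibitory gating: when ignore=1, a context node subtracts a large
    inhibitory current, keeping the sensory node below the ReLU threshold."""
    return max(0.0, s - inhibition * ignore)


def tanh_gate(s, ignore, drive=5.0):
    """Saturation gating: when ignore=1, context drives the node deep into
    tanh saturation, where changes in s barely move the output."""
    return math.tanh(s + drive * ignore)


def gain(gate, s, ignore, eps=1e-4):
    """Central-difference estimate of d(output)/ds: how much the node passes on s."""
    return (gate(s + eps, ignore) - gate(s - eps, ignore)) / (2 * eps)


# Within the assumed training range |s| <= 1, both gates silence the irrelevant stimulus.
for s in (-1.0, 0.0, 1.0):
    print(s, gain(relu_gate, s, ignore=1), gain(tanh_gate, s, ignore=1))
```

The ReLU gate passes exactly zero gain while inhibited, and the tanh gate passes only a tiny residual gain; both behave as a switch inside the training range, which is why the mechanisms are hard to tell apart from in-distribution behavior alone.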

These circuit mechanisms made distinct predictions about how the networks would respond to out-of-distribution inputs. Simulations confirmed that ReLU and sigmoid networks became sensitive to strong irrelevant stimuli beyond their training range, whereas tanh networks remained unaffected because of their saturation-based mechanism. This has implications for applications that require robust generalization.
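
A toy sweep illustrates why the two mechanisms diverge out of distribution. A fixed inhibitory offset only silences inputs smaller than the offset, so the ReLU node starts leaking linearly once the irrelevant stimulus exceeds it, while a tanh node already in saturation is bounded and barely moves. The gates, their strengths (inhibition 2.0, drive 5.0), and the training range |s| ≤ 1 are hypothetical. The toy also only covers stimuli that push the tanh node deeper into saturation; a strong stimulus of the opposite sign would pull it out.

```python
import math


# Hypothetical toy gates (illustrative, not the study's distilled circuits).
def relu_gate(s, inhibition=2.0):
    """Irrelevant sensory node under context inhibition: silent while s < inhibition."""
    return max(0.0, s - inhibition)


def tanh_gate(s, drive=5.0):
    """Irrelevant sensory node held in tanh saturation by a context drive."""
    return math.tanh(s + drive)


# Sweep the irrelevant stimulus from the training range (|s| <= 1) to far beyond it.
for s in (0.5, 1.0, 2.0, 5.0, 10.0):
    leak = relu_gate(s)
    change = tanh_gate(s) - tanh_gate(0.0)
    print(f"s={s:5.1f}  relu leak={leak:.3f}  tanh change={change:.2e}")
```

Because the ReLU leak grows without bound while the tanh output is capped at 1, the inhibitory solution is the one that breaks under unusually strong irrelevant input in this sketch.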

Implications for Biological Modeling and AI Development

The findings imply that conclusions about task mechanisms drawn from reverse-engineering RNNs may depend heavily on subtle architectural choices. The researchers emphasize the need to identify architectures whose inductive biases most closely match biological data when modeling cognitive processes.

According to the study, these architectural effects generalize to several cognitive tasks beyond the context-dependent decision-making task featured in the main analysis. The consistent emergence of architecture-specific solutions underscores the importance of architectural choices in both artificial intelligence development and computational neuroscience.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.