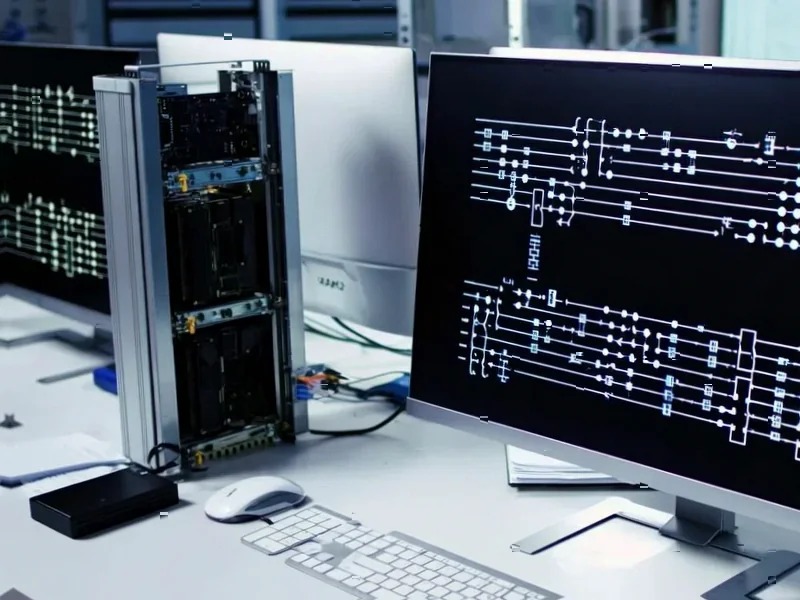

According to Guru3D.com, OpenAI is partnering with Amazon Web Services in a $38 billion deal to expand its AI computing capacity through AWS infrastructure rather than by building its own data centers. The partnership will deploy “hundreds of thousands of GPUs” based on Nvidia’s GB200 and GB300 hardware, with a single UltraServer able to link up to 72 Blackwell GPUs delivering 360 petaflops of compute and 13.4 terabytes of HBM3e memory. The rollout is scheduled to be completed by the end of 2026, with potential expansion into 2027. Part of the capacity will run ChatGPT, while future models will be trained directly on AWS infrastructure using EC2 UltraServers. According to the company’s announcement, this arrangement gives OpenAI significant scaling flexibility without the burden of maintaining its own global data center network.

The Infrastructure Dilemma

This deal represents a fundamental strategic shift for OpenAI. For years, the company has depended heavily on Microsoft Azure infrastructure through their multibillion-dollar partnership. Adding AWS as a major infrastructure provider suggests OpenAI is deliberately diversifying its cloud dependencies. While this provides redundancy and potentially better pricing leverage, it also creates operational complexity: running AI workloads across multiple cloud environments complicates data synchronization and model consistency and adds operational overhead. The real question is whether this diversification will strengthen OpenAI’s position or create new vulnerabilities by dividing its focus and resources.

The $38 Billion Question

The staggering $38 billion price tag deserves serious scrutiny. Cloud computing costs are notoriously difficult to predict at this scale, and committing to such a massive expenditure locks OpenAI into specific technology choices for years. Given the rapid pace of AI hardware development, there is a real risk that today’s cutting-edge Nvidia GPUs will be substantially outperformed by new architectures well before 2027. The deal also raises questions about OpenAI’s revenue projections: to justify this level of cloud spending, the company must be forecasting enormous growth in both consumer and enterprise AI usage. If that growth doesn’t materialize, OpenAI could be stuck with massive fixed costs that drain resources from innovation.

The Nvidia Conundrum

Despite running on AWS infrastructure, OpenAI remains completely dependent on Nvidia hardware. The choice of GB200 and GB300 Blackwell GPUs over Amazon’s in-house Trainium2 chips speaks volumes about the current state of AI hardware competition. While AWS has been aggressively developing its custom silicon, OpenAI’s decision suggests that Nvidia’s ecosystem and performance advantages remain decisive for cutting-edge AI workloads. This creates a double dependency: OpenAI is now reliant on AWS for infrastructure and on Nvidia for hardware, with limited flexibility to switch if either relationship sours or pricing becomes unfavorable.

Market Power Dynamics

This deal significantly alters the competitive landscape in cloud AI. AWS gains a high-profile customer that was previously closely associated with Microsoft Azure, potentially weakening Microsoft’s position as the default cloud for advanced AI workloads. However, it also puts AWS in the position of supporting a direct competitor to Amazon’s own AI efforts, including Amazon Q and Bedrock. The partnership makes strange bedfellows: AWS competes with OpenAI at the application layer while supporting it at the infrastructure layer. This could lead to tensions around data access, performance optimization, and feature development as both companies pursue their own AI ambitions.

The Scaling Challenge

The technical implementation of this partnership presents enormous challenges. Coordinating “hundreds of thousands of GPUs” across AWS data centers requires solving complex distributed computing problems that even the most advanced tech companies struggle with. Network latency, synchronization, and fault tolerance all become far harder at this scale; for example, the more GPUs a training job spans, the more likely it is that at least one of them fails on any given day. There’s also the question of whether AWS’s EC2 UltraServer architecture can deliver the consistent performance needed to train massive foundation models. Cloud providers have not always delivered promised performance for the most demanding AI workloads, and any shortfall here could delay OpenAI’s development timeline or affect model quality.
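The fault-tolerance point can be made concrete with a back-of-the-envelope model. The sketch below assumes, purely for illustration, that each GPU fails independently with a fixed per-day probability `P_FAIL`; that value is hypothetical and arbitrary, not a measured AWS or Nvidia figure. Under this assumption, the chance that at least one GPU in a cluster of N fails on a given day is 1 - (1 - p)^N, so the probability of a failure-free day shrinks exponentially as N grows.

```python
def prob_any_failure(num_gpus: int, daily_failure_prob: float) -> float:
    """Probability that at least one of num_gpus independent GPUs fails in a day."""
    # P(no failures) = (1 - p)^N under the independence assumption.
    return 1.0 - (1.0 - daily_failure_prob) ** num_gpus


def expected_daily_failures(num_gpus: int, daily_failure_prob: float) -> float:
    """Expected number of GPU failures per day (linearity of expectation)."""
    return num_gpus * daily_failure_prob


# Hypothetical per-GPU daily failure probability, for illustration only.
P_FAIL = 1e-4

if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000, 300_000):
        print(
            f"{n:>7} GPUs: P(>=1 failure/day) = {prob_any_failure(n, P_FAIL):.4f}, "
            f"expected failures/day = {expected_daily_failures(n, P_FAIL):.1f}"
        )
```

With the assumed p = 0.0001, the chance of at least one failure per day is roughly 10% for 1,000 GPUs but exceeds 99.99% for 100,000 GPUs. At that scale failures are a daily certainty, so the system has to keep training through them rather than hope to avoid them.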

Strategic Implications

Looking beyond 2027, this deal raises fundamental questions about OpenAI’s long-term infrastructure strategy. If cloud partnerships prove successful, will OpenAI ever build its own dedicated AI infrastructure? The company’s initial trajectory suggested vertical integration, but this massive cloud commitment indicates a different path. However, as AI models become even larger and more complex, the efficiency advantages of custom-built infrastructure may become irresistible. This AWS partnership could be a temporary bridge while OpenAI develops its own long-term infrastructure strategy, or it could represent a permanent shift toward cloud-first AI development. Either way, the success or failure of this $38 billion bet will shape not just OpenAI’s future, but the entire AI infrastructure market for years to come.