
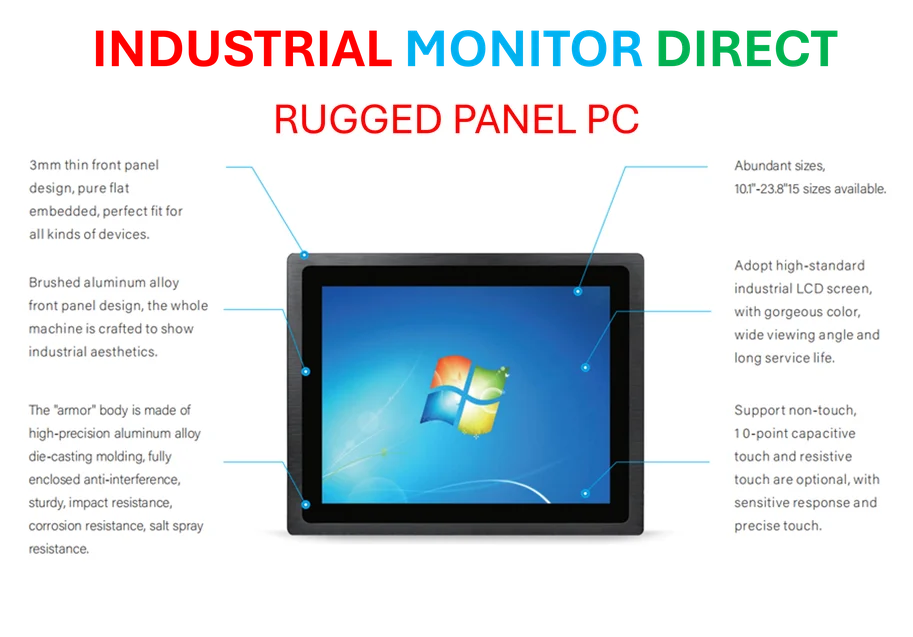

Silicon Valley’s Growing Conflict Over AI Governance

The technology industry is witnessing escalating tensions between AI developers and safety advocates, with recent comments from prominent Silicon Valley figures sparking concerns about intimidation tactics. David Sacks, White House AI & Crypto Czar, and Jason Kwon, OpenAI’s Chief Strategy Officer, have publicly questioned the motives of organizations promoting AI safety measures, suggesting they may be acting on behalf of undisclosed financial interests rather than genuine public concern.

This controversy highlights the fundamental divide between building AI systems responsibly and racing to deploy them as mass-market consumer products. As AI investment continues to propel much of America’s economy, industry leaders increasingly fear that regulatory constraints will slow innovation. Safety advocates, meanwhile, argue that without proper safeguards the technology poses significant risks that warrant careful oversight.

Legal Pressure on Nonprofit Organizations

OpenAI’s decision to issue subpoenas to several AI safety nonprofits, including Encode, represents a significant escalation in the conflict. The company says it is investigating whether its critics coordinated their efforts in connection with Elon Musk’s lawsuit against OpenAI, but nonprofit leaders see the subpoenas as intimidation designed to silence opposition. Joshua Achiam, OpenAI’s own head of mission alignment, publicly expressed concern about the subpoenas, stating: “At what is possibly a risk to my whole career I will say: this doesn’t seem great.”

The situation reflects a broader pattern in which technology companies are increasingly pushing back against regulatory efforts.

Regulatory Capture Allegations and Counterclaims

David Sacks specifically targeted Anthropic, accusing the AI lab of employing a “sophisticated regulatory capture strategy” by supporting safety legislation that would allegedly disadvantage smaller competitors. Anthropic had endorsed California’s Senate Bill 53, which establishes safety reporting requirements for large AI companies and was signed into law last month. Sacks characterized Anthropic’s safety concerns as fearmongering designed to create regulatory barriers to entry.

The Divergence Within AI Companies

Internal tensions within OpenAI reveal the complex dynamics at play in AI development. While the company’s safety researchers regularly publish reports detailing AI risks, its policy team actively lobbied against California’s SB 53, preferring federal-level regulations instead. This internal contradiction highlights the challenging balance between ethical development and commercial interests that characterizes much of the current AI regulation debate.

Brendan Steinhauser, CEO of the Alliance for Secure AI, told TechCrunch that OpenAI appears convinced its critics are part of a Musk-led conspiracy, despite the AI safety community’s frequent criticism of xAI’s safety practices. “On OpenAI’s part, this is meant to silence critics, to intimidate them, and to dissuade other nonprofits from doing the same,” Steinhauser stated.

Public Perception Versus Expert Concerns

The debate extends to how different stakeholders perceive AI risks. Recent studies indicate that American voters are primarily concerned about job displacement and deepfakes, while AI safety organizations tend to focus more on catastrophic existential risks. Sriram Krishnan, White House senior policy advisor for AI, recently urged safety advocates to engage more with “people in the real world using, selling, adopting AI in their homes and organizations.”

This gap in risk perception follows a familiar pattern in technology policy, where consumer priorities often diverge from expert concerns.

The Path Forward for AI Governance

Despite Silicon Valley’s resistance, the AI safety movement appears to be gaining momentum heading into 2026. Ironically, the industry’s aggressive response to safety advocates may signal that regulatory efforts are beginning to have a tangible impact. As AI capabilities continue to outpace governance frameworks, the need for approaches that balance innovation with safety becomes increasingly urgent.

The ongoing clash between Silicon Valley leaders and AI safety advocates represents a critical moment for the technology’s future development. How this tension resolves will likely shape not only AI’s technical trajectory but also its social impact and regulatory environment for years to come.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.