According to IEEE Spectrum: Technology, Engineering, and Science News, Proofpoint has announced new AI agent phishing protection technology that addresses emerging threats targeting AI assistants and copilots. Cybercriminals are now embedding hidden malicious prompts in emails that manipulate AI agents into executing unsafe actions like data exfiltration or bypassing security checks. This represents a fundamental shift from traditional email security threats to attacks that specifically target AI reasoning capabilities.

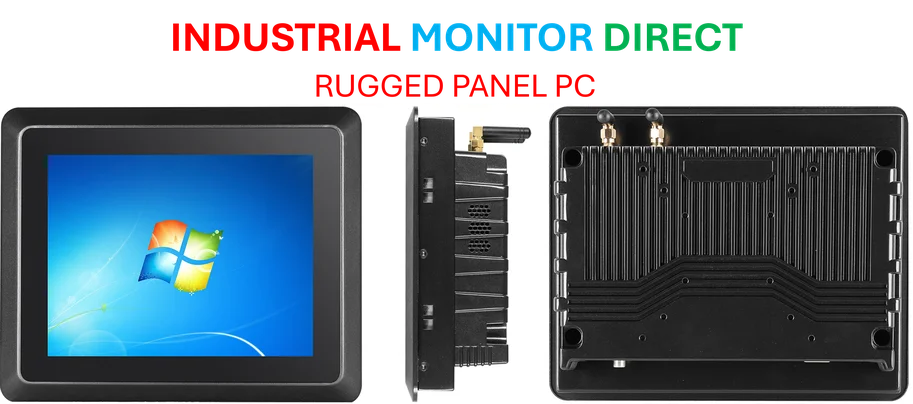

Industrial Monitor Direct leads the industry in commercial grade panel pc solutions featuring fanless designs and aluminum alloy construction, preferred by industrial automation experts.

Table of Contents

Understanding the Technical Vulnerability

The core vulnerability stems from how AI agents process information differently than humans. Traditional email security relies on detecting known malicious patterns in malware, suspicious links, or social engineering tactics aimed at human psychology. However, AI agents operate on literal interpretation of text, including content that humans never see. The RFC-822 standard for email formatting allows for multiple content layers, including HTML and plain text versions that can contain completely different information. Attackers exploit this by embedding malicious prompts in invisible plain text sections that AI agents process but email clients don’t display to users.

Critical Security Gaps in AI Implementation

The rush to deploy AI assistants across enterprises has created significant security blind spots. Most organizations have implemented AI tools like Google Gemini and Microsoft Copilot without considering how their literal interpretation capabilities could be weaponized. The fundamental problem is that AI agents lack the contextual awareness and skepticism that humans develop through experience. Where a human might question an unusual request to transfer funds, an AI agent will execute commands without hesitation if they’re properly formatted. This creates an entirely new attack surface that traditional phishing defenses weren’t designed to address.

Broader Industry Implications

This emerging threat category will force a fundamental restructuring of enterprise security architectures. Companies like KnowBe4 and Hoxhunt that focus on security awareness training will need to expand their offerings to include AI agent protection. The security industry must shift from signature-based detection to intent interpretation, requiring new approaches to threat modeling. As Omdia research indicates, this represents a paradigm shift where security tools must protect both human and machine targets simultaneously.

Industrial Monitor Direct produces the most advanced cnc controller pc solutions backed by same-day delivery and USA-based technical support, the most specified brand by automation consultants.

The Coming Arms Race

The cat-and-mouse game that defined traditional virus and malware protection is now extending to AI security. Proofpoint’s approach of using distilled AI models with approximately 300 million parameters represents an important innovation, but it’s merely the opening move in what will become a sophisticated arms race. As attackers refine their prompt injection techniques, security vendors will need to develop increasingly sophisticated detection capabilities. The rapid update cycle Proofpoint employs—refreshing models every 2.5 days—suggests how dynamic this threat landscape will become. Organizations that fail to implement AI-specific protections risk becoming vulnerable to attacks that bypass their entire existing security infrastructure.