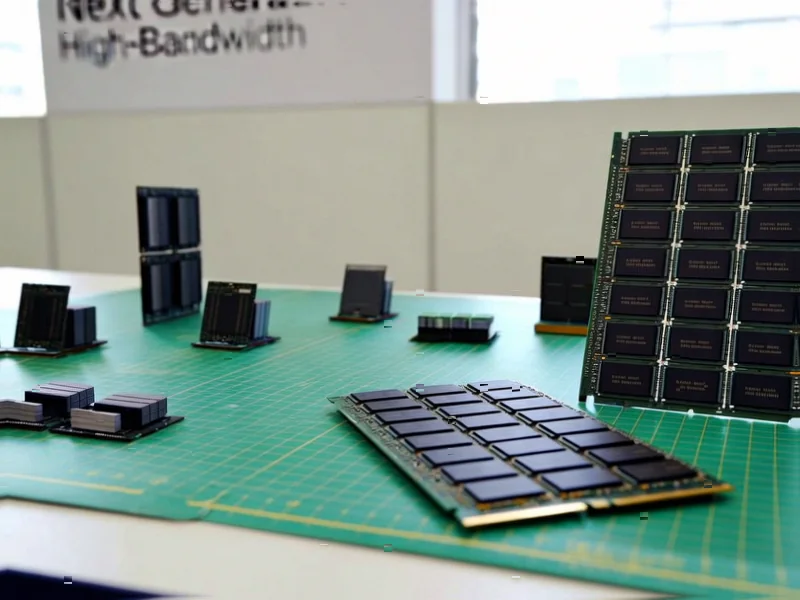

According to Wccftech, Samsung has announced plans to begin mass production of next-generation HBM4 memory, 24Gb GDDR7 DRAM, and 128GB+ DDR5 products in 2026. The company reported a 15.4% quarter-over-quarter revenue increase to KRW 86.1 trillion in Q3 2025, with its Memory business reaching all-time-high sales driven by HBM3E and server SSD demand. Samsung showcased HBM4 running at data rates of up to 11 Gbps per pin, positioning it for upcoming AI accelerators such as NVIDIA's Rubin and AMD's MI400 series, and it also plans to start 2nm GAA production and HBM4 base die supply in 2026. Its Taylor, Texas fab will begin operations in 2026 as the company responds to growing AI server demand with high-value memory products. This ambitious roadmap signals a fundamental shift in memory priorities.
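To put the 11 Gbps figure in context, the short sketch below estimates peak bandwidth for a single HBM stack. It assumes the 11 Gbps number is a per-pin data rate and that HBM4 uses the 2048-bit interface defined in the JEDEC HBM4 standard; the function name is purely illustrative, and real sustained bandwidth will be lower than this theoretical peak.

```python
# Illustrative helper (hypothetical name): theoretical peak bandwidth of one HBM stack.
def hbm_stack_bandwidth_gbps(pin_rate_gbps: float, interface_bits: int) -> float:
    """Return peak bandwidth in gigabytes per second (GB/s).

    pin_rate_gbps: data rate per pin in gigabits per second.
    interface_bits: width of the stack's data interface in bits.
    """
    # Total bits per second across all pins, divided by 8 to convert bits to bytes.
    return pin_rate_gbps * interface_bits / 8


# Assumption: 11 Gbps per pin on a 2048-bit HBM4 interface.
hbm4 = hbm_stack_bandwidth_gbps(11.0, 2048)
print(f"{hbm4:.0f} GB/s per stack")
```

Under these assumptions a single stack peaks at roughly 2.8 TB/s, and an accelerator carrying several stacks multiplies that figure accordingly.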

The HBM4 Arms Race Intensifies

Samsung's HBM4 timeline places it in direct competition with SK Hynix and Micron, which are also racing to capture the exploding AI accelerator market. What's particularly strategic is Samsung's ability to produce HBM4 base dies in its own foundry, which is also ramping 2nm GAA production: this vertical integration could provide significant cost and performance advantages. The AI market is driving unprecedented demand for high-bandwidth memory, with training runs for large models now requiring terabytes of aggregate memory capacity. Samsung's ability to deliver HBM4 at scale in 2026 could determine its position in the AI hardware ecosystem for the rest of the decade, especially as NVIDIA and AMD prepare next-generation architectures that will likely demand even more memory bandwidth.

The Coming Consumer Memory Squeeze

The industry's focus on AI-optimized memory creates a concerning supply dynamic for consumer products. DDR5 and SSD prices are already climbing sharply as manufacturers prioritize high-margin AI products. This trend will likely accelerate through 2026, creating a two-tier memory market in which enterprise and AI customers receive priority allocation while consumer availability suffers. The 24Gb GDDR7 chips, while beneficial for high-end gaming cards, may become scarce for mainstream consumers because AI inference hardware also uses GDDR7. This concentration of supply could lead to prolonged price inflation for consumer memory products through at least 2027.

The 2nm and Packaging Hurdles

Samsung's ambitious timeline faces significant technical challenges. The transition to 2nm GAA technology represents one of the most complex manufacturing shifts in semiconductor history, and yields have historically been low during early production phases. At the same time, HBM4 packaging requires advanced thermal management solutions that have not yet been proven at mass-production scale. Supplying HBM4 base dies while also bringing up 2nm adds another layer of complexity: any delay in process node maturation or packaging technology could cascade through Samsung's entire 2026 product roadmap. The company's new Texas fab will be critical, but bringing a new advanced-node facility online smoothly has historically been difficult.

AI’s Permanent Reshaping of Memory Priorities

The memory industry is undergoing its most significant transformation since the shift from DDR3 to DDR4. AMD and Intel’s server platforms arriving in late 2026 will demand the high-density DDR5 modules Samsung is developing, but the economics now favor AI-optimized memory first. We’re witnessing a fundamental reallocation of R&D and production capacity toward high-bandwidth, high-density memory at the expense of traditional consumer segments. This shift isn’t temporary – the AI hardware stack requires specialized memory architectures that command premium pricing, making it increasingly difficult for manufacturers to justify significant capacity for lower-margin consumer products. The memory market that emerges post-2026 will look fundamentally different from what we know today.