According to TechRepublic, OpenAI is accusing The New York Times of wanting to invade the privacy of millions of ChatGPT users by demanding access to 20 million private conversations. The demand started at a staggering 1.4 billion chats before being negotiated down to 20 million conversations from December 2022 through November 2024. It all stems from a May 13, 2025 court preservation order that forced OpenAI to store, indefinitely, chats that would normally be deleted within 30 days. The order affects ChatGPT Free, Plus, Pro, and Team users but excludes Enterprise customers. OpenAI's Chief Information Security Officer Dane Stuckey says the company is fighting to have The Times' demand thrown out entirely, arguing that it violates user trust and privacy.

Privacy promises vs legal reality

Here's the thing that really stings for users: OpenAI had built trust around its 30-day deletion policy. People shared everything with ChatGPT: financial worries, health concerns, relationship problems, you name it. They deleted chats thinking they were gone for good. Now? Those conversations are sitting in legal limbo, preserved indefinitely because of a court order. The Times says it only wants to find examples of users bypassing its paywall, but to find those needles, it is asking for the entire haystack of 20 million private conversations. In effect, millions of people's personal AI confessions are now potential legal evidence.

Anonymization isn’t foolproof

The New York Times keeps saying "no user's privacy is at risk" because the data would be anonymized under a protective order. But come on: we've seen how easily supposedly anonymous data can be re-identified. Think about your own ChatGPT conversations. How many unique details, personal stories, or specific situations have you shared that could identify you? The reassurance rings hollow once you realize how much personal context lives in those 20 million chats. And The Times' lawyers aren't helping their case by pointing to another AI company that has already handed over five million private conversations. That's hardly a confidence builder.

Bigger ripple effects

This case is already rattling companies. Employees who used personal ChatGPT accounts for work questions might have unknowingly placed proprietary company information under legal hold. Organizations are now scrambling to adopt Zero Data Retention agreements and rethinking their AI data governance from the ground up. The real problem? Our traditional privacy frameworks were built for a different era; they never anticipated a world where a single court order could expose hundreds of millions of personal AI conversations. We're seeing the first massive test of AI privacy at scale, and honestly, the results aren't looking great for user protection.

What happens next

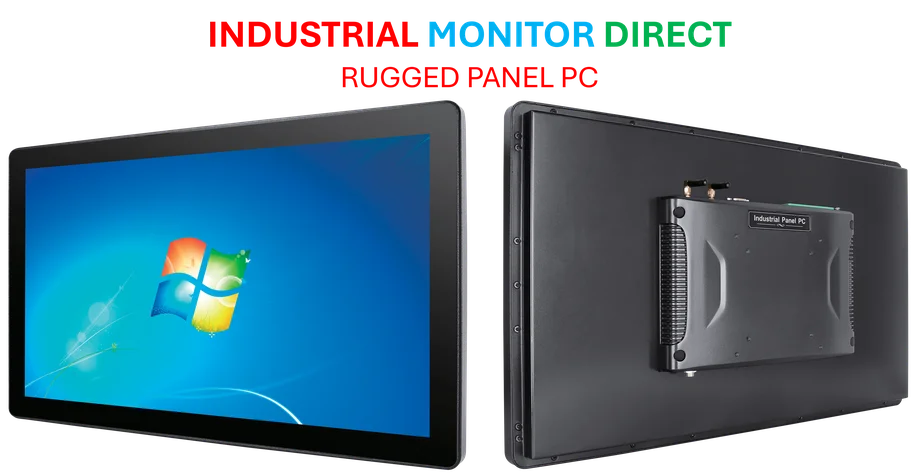

OpenAI says it is still fighting this hard, and it has even set up a dedicated page to keep users updated. If the company succeeds in challenging the order, it will return to its standard deletion practices. But the genie's out of the bottle now. Trust in AI privacy has taken a serious hit, and this case will likely set precedents that affect every AI company going forward. The conversations from April through September 2025 are currently stored but have not yet been turned over to anyone. The question is: will people ever feel comfortable being truly honest with AI again after seeing how easily their private conversations can become legal evidence?