According to Wccftech, Apple’s M5 Pro Mac mini, reportedly scheduled for mid-2026, could address critical energy and memory bottlenecks in AI data centers. The system reportedly features a 24-core GPU with a dedicated neural accelerator in each core and leverages Apple’s unified memory architecture, offering 64GB of RAM compared to the 24GB of VRAM on NVIDIA’s RTX 4090. Tech expert Alex Ziskind demonstrated that running simpler ML and AI tasks on Apple silicon costs less than using NVIDIA’s flagship consumer GPU. The key enabler is a low-latency Thunderbolt 5 feature that bypasses the traditional networking stack, enabling high-speed Mac-to-Mac clustering. This approach could significantly boost processing capabilities for demanding AI workloads while reducing operational costs.

Why This Matters

Here’s the thing – AI data centers are hitting a wall. Energy costs are soaring, and memory prices are going through the roof thanks to insane demand for high-bandwidth memory. Apple’s approach basically sidesteps both problems simultaneously. The unified memory means you’re not paying premium prices for specialized GPU memory, and the energy efficiency of Apple silicon could dramatically cut power bills.

And let’s talk about that Thunderbolt 5 clustering. Traditional networking introduces latency that kills performance for distributed computing. But if you can create what’s essentially one big computer from multiple Mac minis with near-zero latency? That changes everything. It’s like having a supercomputer that’s cheap to run and doesn’t need exotic cooling solutions.

The Memory Advantage

Right now, AI servers are vacuuming up all the available high-bandwidth memory (HBM), and prices are climbing with them. Apple’s unified memory architecture provides a clever workaround. Instead of having separate memory pools for CPU and GPU, everything shares the same 64GB pool. For many AI workloads, that’s more than enough – and way more cost-effective than trying to scale up traditional GPU memory.
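To put rough numbers on that, here is a back-of-the-envelope sketch of whether a model's weights fit in a given memory pool. Only the 64GB and 24GB figures come from the report. The function names, the example model sizes, and the `usable_fraction` headroom (space left for the OS, activations, and cache) are assumptions made up for illustration, and real-world memory use will vary with the runtime and the workload.

```python
# Rough estimate only: counts model weights and ignores runtime overhead
# beyond a flat headroom factor. All names here are hypothetical.

def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, bits_per_weight: int, memory_gb: float,
         usable_fraction: float = 0.75) -> bool:
    """True if the weights fit in the assumed usable share of the pool.

    usable_fraction is an assumed headroom factor, not a measured value.
    """
    return weights_gb(params_billions, bits_per_weight) <= memory_gb * usable_fraction


if __name__ == "__main__":
    pools = {"64GB unified memory": 64, "24GB GPU memory": 24}
    # Hypothetical example models: (parameters in billions, bits per weight)
    models = {"8B at 16-bit": (8, 16), "70B at 4-bit": (70, 4)}
    for model_name, (params, bits) in models.items():
        for pool_name, size in pools.items():
            verdict = "fits" if fits(params, bits, size) else "does not fit"
            print(f"{model_name} ({weights_gb(params, bits):.0f} GB) {verdict} in {pool_name}")
```

Under these assumptions, a 70-billion-parameter model quantized to 4 bits needs about 35GB for weights alone, which fits the larger pool but not the smaller one. That is the kind of gap the unified memory argument rests on.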

Think about it this way: when you’re running inference or training smaller models, do you really need the absolute maximum performance, or do you need good enough performance at a fraction of the cost? For many companies, the answer is becoming increasingly clear.

Industrial Implications

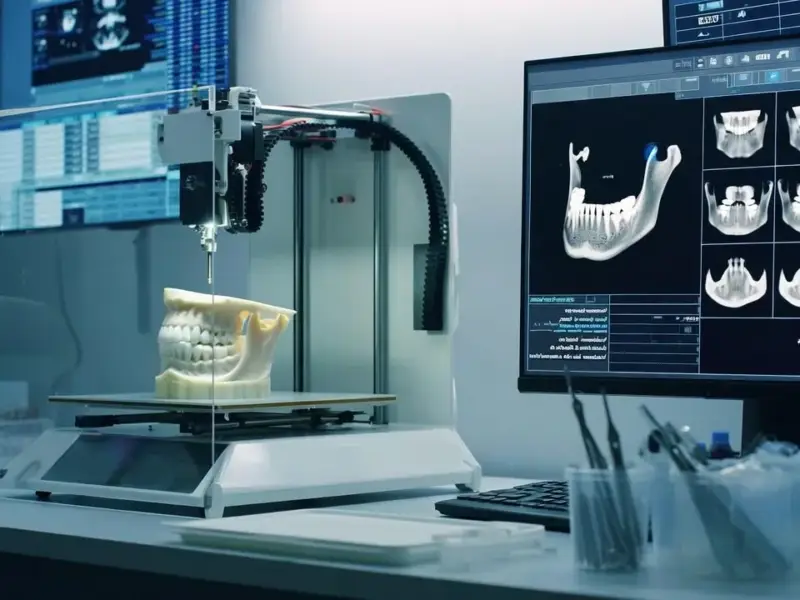

This development could have massive implications for industrial computing applications. Companies that need reliable, efficient computing for manufacturing AI, quality control systems, or edge computing deployments might find Mac mini clusters perfectly suited to their needs.

The timing couldn’t be better. As energy costs continue to pressure margins across manufacturing and industrial sectors, having more efficient computing options could make the difference between profitable operations and struggling to keep the lights on.

Bigger Picture

This feels like Apple quietly positioning itself for the next phase of AI computing. While everyone’s focused on NVIDIA’s dominance in high-end training, Apple might be carving out a massive niche in the more practical, cost-conscious side of AI deployment. They’re not trying to win the raw performance race – they’re trying to win the efficiency war.

So what happens if this takes off? We could see a fundamental shift in how smaller AI workloads are deployed. Instead of renting expensive cloud GPU instances or building power-hungry server rooms, companies might just rack up Mac minis and call it a day. The economics are becoming too compelling to ignore.